llms.txt is a file added to your site to help answer engines understand it effectively.

Thanks to this, answer engines like ChatGPT, Copilot, and Perplexity can process your website’s content accurately and efficiently.

It is currently just a proposal and not yet a widely accepted technology.

However, as its usage is spreading rapidly, it has a high potential to become a standard.

Although much of its power comes from widespread adoption, it can still deliver significant benefits when used on a single site.

Therefore, it’s important to get acquainted with the llms.txt standard as soon as possible.

A Brief History of llms.txt

Large language models, although a major revolution, have struggled in certain areas, especially in parsing complex HTML structures, advertisements, and JavaScript code.

This posed a major problem for extracting accurate and complete information from the internet.

In fact, even a transformative technology like LLMs was almost helpless when it came to understanding JavaScript-based content.

As a solution, on September 3, 2024, Jeremy Howard, co-founder of Answer.AI, proposed the llms.txt standard.

This proposal involves converting a website’s content into a concise and structured format, eliminating the need to parse HTML, ads, or JavaScript.

Thus, answer engines would be able to access your main content in the fastest and easiest way possible.

Why Does It Matter?

There are so many new terms and technologies that it’s hard to even understand what is important.

To be honest, some of them are just a waste of time.

But this time, the matter is serious.

Here’s why the llms.txt standard is important:

- If your content is not being discovered by answer engines like ChatGPT, llms.txt can help them detect and process it.

- Answer engines have limited attention spans, and llms.txt keeps their focus on your vital information instead of wasting their context window. Otherwise, this crucial content could remain buried within thousands of words of HTML and be overlooked.

- Even if you don’t comply with this standard, they will still crawl you — but poorly. They might miss the most relevant content, misinterpret it, or prioritize outdated/wrong pages. You don’t want to present your users with information that looks like it came out of a time machine.

- It could become a default practice at any moment, and naturally, your competitors might be rewarded just for moving faster than you.

💡 Analogy: llms.txt is like an air traffic control tower. It tells LLMs which information should land and which should remain in holding patterns.

👉 For example… an e-commerce site could highlight its popular products, or a software library could emphasize its getting started guide.

📌 Many of the reasons that make llms.txt important… are also what make answer engine optimization important. So don’t forget to check out our article: Why AEO Matters: Top 15 Reasons

Understanding the Problems that Led to the Emergence of llms.txt

To grasp why this proposal is critical, we need to dig a little deeper.

When large language models encountered the internet, several serious problems arose:

- Complex HTML structures: Web pages often contain not only text but also various formatting tags, links, and other HTML elements. Parsing this structural complexity and extracting meaningful content was challenging for answer engines.

- Other distracting elements: Ads, navigation menus, and other visual elements on web pages made it harder for answer engines to focus on the main content, leading to misinterpretations, loss of context, and errors caused by noisy data.

- JavaScript interactions: While answer engines can usually process static HTML, they typically do not execute JavaScript. This prevented them from fully understanding the final state of a page and often caused them to treat such content as noise. Given that many websites rely heavily on JavaScript, this was a major issue.

- Limited context window: The amount of text an answer engine can process at once (context window) is limited. Unnecessary HTML and JavaScript cluttered valuable context space, making it harder to focus on key information.

- High token consumption: The number of tokens used when interacting with answer engines depends on the amount of processed data. Processing unnecessary code increased costs for users.

llms.txt provides either direct or indirect solutions for all these problems.
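To make the noise and context-window problems above concrete, here is a minimal sketch that strips scripts, styles, and navigation from a page and compares a rough token estimate before and after. The sample HTML, the `MainTextExtractor` class, and the "about 4 characters per token" rule of thumb are all assumptions made for illustration; real tokenizers and real pages behave differently.

```python
from html.parser import HTMLParser


class MainTextExtractor(HTMLParser):
    """Collects visible text, skipping <script>, <style>, and <nav> blocks."""

    SKIPPED_TAGS = {"script", "style", "nav"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep text only when we are outside every skipped block.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def extract_text(html: str) -> str:
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


def rough_token_count(text: str) -> int:
    # Crude heuristic (an assumption): roughly 4 characters per token.
    return max(1, len(text) // 4)


# Invented sample page: one heading and one sentence of real content.
SAMPLE_HTML = """<html><head><style>.ad{color:red}</style>
<script>window.track = function () { return 42; };</script></head>
<body><nav><a href="/">Home</a><a href="/shop">Shop</a></nav>
<h1>Return Policy</h1><p>Items can be returned within 30 days.</p>
</body></html>"""

clean = extract_text(SAMPLE_HTML)
print(clean)
print(rough_token_count(SAMPLE_HTML), "->", rough_token_count(clean))
```

Even on this tiny page, most of the characters are markup rather than content, which is the overhead llms.txt tries to remove at the source.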

The llms.txt Standard Proposal: An Overview

The llms.txt standard is an innovative approach designed to solve the problems answer engines face when interacting with websites.

It helps answer engines retrieve textual content from web pages in a cleaner and more efficient manner.

The underlying logic is quite simple:

→ Create a basic .txt file that contains only the main textual content, in a format that answer engines can easily read and process, and place it in the root directory of your website.

However, although it sounds simple, this mission is crucial for answer engine optimization efforts.

📝 A Quick Note: llms.txt uses Markdown format, because Markdown is currently the most common and most easily understood format for language models.

Benefits of llms.txt

- When answer engines focus on clean content instead of complex structures, they can understand key information such as technical documentation, API specifications, and educational content more accurately and completely.

- Eliminating distractions like ads, menus, footnotes, and comments without additional effort allows LLMs to focus solely on meaningful information. This boosts the model’s performance and enables it to produce more accurate responses.

- It indirectly addresses JavaScript access issues by encouraging website owners to provide a static copy of the main content.

- Reducing the amount of data that answer engines need to process helps users consume fewer tokens during AI interactions, thereby reducing costs.

- The reduced need for computational power decreases server load and energy consumption, improving overall resource efficiency.

- llms.txt files are easy to download and process. This simplifies and accelerates data collection processes, makes them more reliable, and less vulnerable to structural changes on the website.

- It gives website administrators more say over which content answer engines are pointed to. Especially for news sites, blogs, and private content platforms, this helps steer AI tools toward the content the publisher wants used. Actual access restrictions still belong in robots.txt, since llms.txt itself does not block anything.

⚙️ Another technical benefit is… its dual readability: it is both human- and LLM-readable. Furthermore, it allows for fixed processing methods such as parsers and classic programming techniques like regex.
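As a rough illustration of the "classic programming techniques" point above, the sketch below parses an llms.txt file with a single regular expression and plain string checks. The function name `parse_llms_txt`, the returned dictionary layout, and the sample text are assumptions made for this example, not part of the proposal.

```python
import re

# Matches "- [Title](url)" with an optional ": note" suffix.
LINK_RE = re.compile(
    r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)]+)\)(?::\s*(?P<note>.*))?$"
)


def parse_llms_txt(text: str) -> dict:
    """Parse the H1 title, blockquote summary, and H2 link sections."""
    result = {"title": None, "summary": None, "sections": {}}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# ") and result["title"] is None:
            result["title"] = line[2:].strip()
        elif line.startswith("> ") and result["summary"] is None:
            result["summary"] = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            result["sections"][current] = []
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                result["sections"][current].append(match.groupdict())
    return result


SAMPLE = """# Sustainable Architecture Knowledge Hub

> A resource center focused on sustainable building.

## Green Materials Guide

- [Eco-Friendly Building Materials](https://example.com/content/green-materials-guide.md): Categorized descriptions of sustainable materials.

## Optional

- [Full Index for LLMs](https://example.com/llms-full.txt)
"""

parsed = parse_llms_txt(SAMPLE)
print(parsed["title"])
print(list(parsed["sections"]))
```

Because the format is this regular, the same file can be handled by a small script like this one or handed directly to a language model.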

💬 The feedback… arising from isolated company practices, as shown in the example, indicates that llms.txt is working. However, it’s important to note that in order to create a larger impact and truly become an AEO standard, it must be widely adopted.

The 3 Core Components of the llms.txt Standard

The llms.txt proposal fundamentally consists of three components:

1. llms.txt:

Located in the root directory of the website. It provides answer engines with an index, a roadmap, and a summary about the site, specifically pointing to answer-engine-friendly pages [typically in markdown (.md) format].

Its primary purpose is to inform LLMs about which content is more suitable for them and how they can access it.

It typically includes information such as:

- Brief descriptions of important content sections.

- Links leading to detailed information (mostly in .md format).

- Alternatively, a reference to the llms-full.txt file where all the content is directly available.

👇 The file looks something like this:

# Sustainable Architecture Knowledge Hub

> A resource center focused on sustainable building, eco-friendly materials, and energy efficiency.

Curated for large language models to access answer-friendly content in markdown format.

## Introduction to Sustainable Architecture

- [Foundational Concepts and Case Studies](https://example.com/content/sustainable-architecture-intro.md): Covers principles, design strategies, and real-world examples.

## Green Materials Guide

- [Eco-Friendly Building Materials](https://example.com/content/green-materials-guide.md): Categorized descriptions of sustainable materials.

## Energy-Efficient Design

- [Low-Energy Design Techniques](https://example.com/content/energy-efficient-design.md): Strategies and technologies for reducing energy consumption.

## FAQ & Quick Answers

- [Common Questions Answered](https://example.com/content/faq.md): Concise responses to frequently asked questions.

## Full Content (Optional)

- [Full Index for LLMs](https://example.com/llms-full.txt)

👇 …or a brief outline:

# Title

> Optional description goes here

Optional details go here

## Section name

- [Link title](https://link_url): Optional link details

## Optional

- [Link title](https://link_url)

2. .md Extension Pages (Detailed Content)

This involves offering a Markdown version of each web page, accessible by appending .md to the URL of the HTML version.

The key content itself is provided directly in a clean and structured Markdown (.md) format, so answer engines can parse it easily without having to deal with distracting code structures.
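The URL convention can be sketched as a small helper. The function name `markdown_url` is hypothetical, and the handling of URLs that end in a slash (appending `index.html.md`) reflects our reading of the proposal, so treat it as an assumption and check it against your own server setup.

```python
from urllib.parse import urlsplit, urlunsplit


def markdown_url(page_url: str) -> str:
    """Derive the Markdown counterpart of an HTML page URL."""
    parts = urlsplit(page_url)
    path = parts.path or "/"
    if path.endswith("/"):
        # Assumption: URLs without a file name get index.html.md appended.
        path += "index.html.md"
    else:
        path += ".md"
    return urlunsplit(
        (parts.scheme, parts.netloc, path, parts.query, parts.fragment)
    )


print(markdown_url("https://example.com/docs/intro.html"))
print(markdown_url("https://example.com/docs/"))
```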

Example:

# Quick Snack Idea: Peanut Butter Banana Toast

A fast and healthy snack you can prepare in under 5 minutes.

## Ingredients

- 1 slice of whole grain bread

- 1 tablespoon peanut butter

- 1 ripe banana

## Instructions

1. Toast the bread.

2. Spread peanut butter evenly.

3. Slice the banana and place on top.

## Tip

Add a sprinkle of cinnamon or chia seeds for extra flavor.

---

*This Markdown version provides clean, structured content for easy parsing.*

3. /llms-full.txt (Comprehensive Content File)

This file can also reside in the root directory of the website and contains the full textual content (or the parts most important for answer engines) all in one file.
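One simple way to produce such a file is to concatenate your Markdown documents under a common title. The sketch below assumes a layout of one H1 for the site and one H2 per document; that layout, the function name `build_llms_full`, and the sample documents are illustrative choices, since the file's internal structure is not strictly defined.

```python
def build_llms_full(site_title: str, documents: list[tuple[str, str]]) -> str:
    """Join (heading, markdown_body) pairs into one Markdown document."""
    parts = [f"# {site_title}"]
    for heading, body in documents:
        parts.append(f"## {heading}\n\n{body.strip()}")
    return "\n\n".join(parts) + "\n"


# Invented sample content.
full_text = build_llms_full(
    "Sustainable Architecture Knowledge Hub",
    [
        ("Green Materials Guide", "Bamboo and reclaimed wood are common choices.\n"),
        ("FAQ", "Q: What is passive design?\nA: Design that reduces energy use."),
    ],
)
print(full_text)
```

The output would then be saved as llms-full.txt in the site's root directory.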

How Does It Work?

The /llms.txt file provides the answer engine with an overview of the website’s structure and key contents.

If the website supports .md extension pages, /llms.txt contains direct links to these pages, and the answer engine can follow them to access detailed and clean content.

Alternatively, the /llms.txt file can contain a reference to the /llms-full.txt file, where the entire content is consolidated. In this case, the answer engine can read all the necessary information from a single file.

Website developers can choose the approach (or a combination of approaches) that best suits their site’s structure and the usage scenarios of answer engines.

For example… a large website might provide both an llms.txt index and .md versions of key pages, whereas a smaller site might simply prefer to offer everything through a single llms-full.txt file.

🔑 Key takeaway: llms.txt acts as an index and routing mechanism, .md pages represent the clean and structured target content, and llms-full.txt serves as an alternative where all (or most) important content is gathered in one place.

👩‍💻 Pro tip: While llms-full.txt offers general context, .md files provide in-depth knowledge. To ensure a comprehensive understanding, it’s strategic to use both simultaneously.

👩‍💻 Some AI tools… allow you to paste a single link to load information directly into their context windows. Instead of pasting dozens of links individually to assistants like Windsurf, you can supply a comprehensive file using /llms-full.txt.

How Do Answer Engines Use the llms.txt File?

When an LLM-based tool that supports the standard is looking for an answer to a question or running a knowledge-gathering task, it can check a site’s llms.txt file first.

It works like a guide and operates in three steps:

1. Mapping the Roadmap

First, the LLM fetches the site’s /llms.txt file.

Instead of crawling the entire site page by page (HTML), it quickly learns which sections are important.

It’s like knowing which rooms are relevant before entering a building.

2. Fetching Clean, Direct Information

Next, it follows the listed links and downloads the related content (usually documents in .md format).

These documents are clean—free from ads, navigation menus, or other distractions.

Only the useful information remains.

3. Deciding How Much Information is Needed

Finally, the system checks: “Is this document mandatory or optional?”

If a document is marked as “optional”, it retrieves it only if necessary.

This saves memory and increases speed by avoiding irrelevant data.
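The third step can be sketched as a filter over the link list. Here we assume that "optional" documents are the ones listed under an H2 section named "Optional", as in the outline shown earlier; the function name `links_to_fetch` and the sample file are made up for illustration.

```python
import re

LINK_RE = re.compile(r"\[([^\]]+)\]\(([^)]+)\)")


def links_to_fetch(llms_txt: str, include_optional: bool = False) -> list[str]:
    """Return linked URLs, skipping the 'Optional' section unless requested."""
    urls = []
    in_optional = False
    for line in llms_txt.splitlines():
        line = line.strip()
        if line.startswith("## "):
            in_optional = line[3:].strip().lower() == "optional"
            continue
        if in_optional and not include_optional:
            continue
        match = LINK_RE.search(line)
        if line.startswith("-") and match:
            urls.append(match.group(2))
    return urls


SAMPLE = """# Docs

## Guides

- [Intro](https://example.com/intro.md): Start here

## Optional

- [Changelog](https://example.com/changelog.md)
"""

print(links_to_fetch(SAMPLE))
print(links_to_fetch(SAMPLE, include_optional=True))
```

A tool with a small context budget would call it with the default, and one with room to spare would pass `include_optional=True`.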

Example:

If a user asks an LLM: “How can I create a new blog post on NovaPress?”, the model would:

- Check https://docs.novapress.dev/llms.txt

- Find the ## Guides section and locate the linked documents.

- Download and review a file like creating_blog_posts.md.

Then, based on this clean, structured document, guide the user step-by-step through the blog post creation process.

Thus, the model accesses the most accurate information directly without needing to crawl the entire website.
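The NovaPress walk-through can be simulated offline. In this sketch a dictionary stands in for HTTP requests, the file contents and the `/guides/` path are invented, and a naive keyword match replaces the model's own judgment about which link is relevant; `find_guide` is a hypothetical helper, not something a real answer engine exposes.

```python
import re

# Stand-in for HTTP: maps URLs to file contents. All contents are invented.
FAKE_SITE = {
    "https://docs.novapress.dev/llms.txt": (
        "# NovaPress Docs\n\n"
        "## Guides\n\n"
        "- [Creating blog posts](https://docs.novapress.dev/guides/creating_blog_posts.md): Write and publish a post.\n"
        "- [Managing themes](https://docs.novapress.dev/guides/themes.md): Install and edit themes.\n"
    ),
    "https://docs.novapress.dev/guides/creating_blog_posts.md": (
        "# Creating blog posts\n\n1. Open the dashboard.\n"
    ),
}

LINK_RE = re.compile(r"^-\s*\[([^\]]+)\]\(([^)]+)\)")


def find_guide(index_url: str, section: str, keywords: set[str], fetch=FAKE_SITE.get):
    """Return the first document in `section` whose link title mentions a keyword."""
    in_section = False
    for line in (fetch(index_url) or "").splitlines():
        line = line.strip()
        if line.startswith("## "):
            in_section = line[3:].strip() == section
        elif in_section:
            match = LINK_RE.match(line)
            if match and keywords & set(match.group(1).lower().split()):
                return fetch(match.group(2))
    return None


doc = find_guide("https://docs.novapress.dev/llms.txt", "Guides", {"blog"})
print(doc)
```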

How to Create an Effective llms.txt File?

There are no strict rules about how exactly an llms.txt file should be written—it depends entirely on your answer engine optimization strategy.

However, to create effective llms.txt files, you should follow these principles:

- Use concise and clear language.

- Provide brief and informative explanations alongside resource links.

- Avoid vague terms and unexplained jargon; they work against the purpose of answer engines.

Also, once the file is written, test a range of language models to see whether they can answer questions based on your content.

And, it should include:

- A single H1 page title (this is technically the only required element).

- A short summary and essential basic information needed to understand the file.

- Markdown sections like paragraphs and lists that explain the project and how to interpret the provided files.

- “File lists” divided by H2 headings, each containing named links with optional notes.
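The checklist above lends itself to a quick automated check. The following sketch is only a rough linter under our own assumptions: the function name `check_llms_txt`, the wording of the messages, and the decision to flag a missing summary are ours, and passing it does not guarantee that any particular tool will accept the file.

```python
import re

LINK_ITEM = re.compile(r"^-\s*\[[^\]]+\]\([^)]+\)")


def check_llms_txt(text: str) -> list[str]:
    """Return a list of problems; an empty list means the basic shape looks right."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    problems = []

    h1_count = sum(1 for line in lines if line.startswith("# "))
    if h1_count != 1:
        problems.append(f"expected exactly one H1 title, found {h1_count}")
    elif not lines[0].startswith("# "):
        problems.append("the H1 title should be the first line")

    if not any(line.startswith("> ") for line in lines):
        problems.append("no blockquote summary found (recommended)")

    in_file_list = False
    for line in lines:
        if line.startswith("## "):
            in_file_list = True
        elif in_file_list and line.startswith("-") and not LINK_ITEM.match(line):
            problems.append(f"list item without a Markdown link: {line}")
    return problems


GOOD = "# Title\n\n> Summary\n\nDetails\n\n## Docs\n\n- [Guide](https://example.com/guide.md): Intro\n"
BAD = "## Docs\n\n- Guide without link\n"

print(check_llms_txt(GOOD))
print(check_llms_txt(BAD))
```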

⚠️ Even though… everything looks simple on paper, during integration you may need additional tools or plugins, especially for JavaScript applications.

Practical Ways to Create an llms.txt

It’s important to know what you’re doing when creating it.

However, there are many basic yet effective llms.txt generators available.

Roughly, they work like this:

- You provide the URL of your homepage.

- You select which types of links you want to extract—titles, navigation, footer, etc.

- You click Submit, and your file is prepared.

- You copy the output and save it in your server’s root directory.

In many cases, you might not even have to manually upload it.

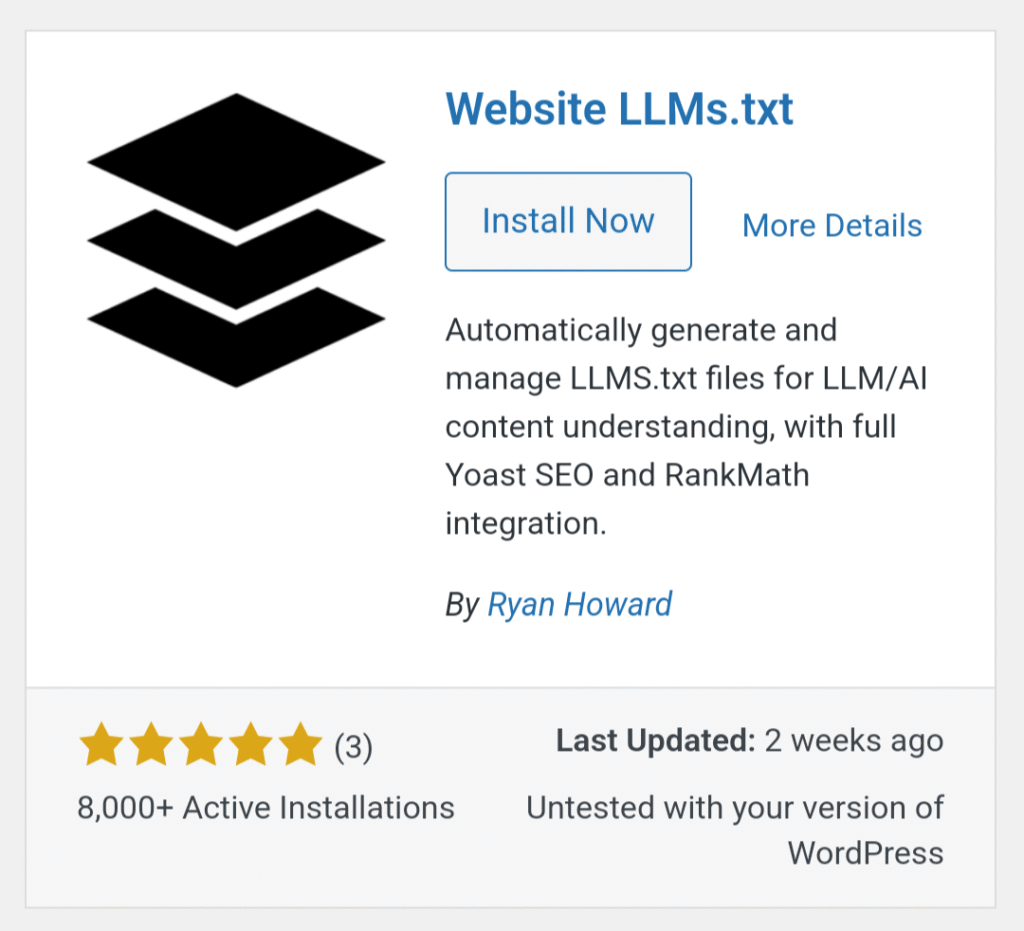

If you are using a mainstream CMS like WordPress, some plugins can both generate the file and automatically place it in the directory.

⚠️ However… trying to oversimplify this process without a proper strategy can be pointless or even harmful for many types of sites. Remember: automatically generated content is often shallow, repetitive, and lacks authenticity.
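Under the hood, the output step of such a generator is little more than string formatting. The sketch below skips the crawling part and assumes you already have a hand-curated list of pages; `generate_llms_txt` and the sample shop data are hypothetical and not taken from any specific tool.

```python
def generate_llms_txt(title: str, summary: str, sections: dict) -> str:
    """Build llms.txt text.

    `sections` maps an H2 name to a list of (link_title, url, note) tuples;
    an empty note is omitted from the output.
    """
    lines = [f"# {title}", "", f"> {summary}"]
    for name, links in sections.items():
        lines += ["", f"## {name}", ""]
        for link_title, url, note in links:
            entry = f"- [{link_title}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
    return "\n".join(lines) + "\n"


output = generate_llms_txt(
    "Shop",
    "A store.",
    {
        "Products": [
            ("Best sellers", "https://example.com/best.md", "Top items"),
            ("Returns", "https://example.com/returns.md", ""),
        ]
    },
)
print(output)
```

Writing the descriptions yourself, rather than scraping page titles, is what keeps the result from becoming the shallow output warned about above.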

Alternative Approaches

Various approaches have emerged to address the related problems:

- Segmentation technique and model development: Developing models that can filter out irrelevant content to help LLMs better understand HTML structures.

- JavaScript execution environments: Developing custom browser-like execution environments to enable LLMs to access JavaScript content.

- DOM (Document Object Model) understanding: Efforts aimed at helping LLMs understand not only text-based HTML but also the DOM structure of a web page. The goal is for the LLM to gain more information about the visual layout and relationships of content.

- Enhancing attention mechanisms: Through advanced attention mechanisms and larger training datasets, the aim is for LLMs to better focus on important information in the text and understand context more effectively.

- Custom data preprocessing techniques: Developing specialized data preprocessing methods such as cleaning up ads, removing unnecessary HTML tags, and leaving only the main content to make web data more suitable for LLMs.

Jeremy Howard’s proposed llms.txt standard is a result of efforts to develop such custom data preprocessing techniques.

It eliminates the need for complex HTML parsing and noisy data cleaning by allowing website owners to provide a preprocessed, clean version containing only the main text content.

This is, in a way, an effective preprocessing step done on the website side.

How Does It Differ From Other Approaches?

The factors that allow llms.txt to stand out clearly from other approaches are:

- If widely adopted by websites, it could significantly reduce the need for advanced parsing techniques.

- It does not offer a solution for understanding the DOM structure. Instead, it eliminates DOM complexity by directly focusing on text content.

- It does not directly enhance LLMs’ attention mechanisms or contextual understanding abilities. However, by providing cleaner and more focused data, it can help these mechanisms function more effectively.

🗒️ In summary: If it becomes widespread, it could reduce the need for advanced parsing techniques.

Current Standards vs. the llms.txt Proposal

llms.txt is designed to coexist with existing web standards.

For example, it can complement robots.txt by providing context for allowed content.

The file can also reference the structured data markup used on the site and help LLMs understand how to interpret this information in context.
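One practical way to keep the two files in agreement is to verify that every URL you list in llms.txt is also allowed by your robots.txt. The sketch below uses Python's standard `urllib.robotparser`; the helper name `allowed_links`, the sample rules, and the URLs are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def allowed_links(robots_txt: str, urls: list[str], user_agent: str = "*") -> list[str]:
    """Keep only the URLs that the given robots.txt rules allow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# Invented sample rules and links.
ROBOTS = "User-agent: *\nDisallow: /private/\n"
LINKS = [
    "https://example.com/docs/intro.md",
    "https://example.com/private/notes.md",
]

allowed = allowed_links(ROBOTS, LINKS)
print(allowed)
```

Any link filtered out here is a sign that llms.txt is recommending content that robots.txt tells crawlers to avoid.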

The following table shows how it differs from other standards:

| Standard | robots.txt | sitemap.xml | llms.txt |
| --- | --- | --- | --- |
| Main purpose | Specifies which pages automated tools can access on the site | Lists all indexable, human-readable pages on the site | Provides a permitted, contextual summary of the site’s content for LLMs |
| Target user | Web crawler bots (e.g., Googlebot, Bingbot) | Search engines (for SEO purposes) | LLMs (large language models), and consequently answer engines (ChatGPT, Copilot, Perplexity, etc.); for AEO purposes |
| Content type | Access permissions and restrictions (Allow, Disallow) | List of page URLs | Allowed content, structured data references, and semantic context for LLMs |
| File path | /robots.txt | /sitemap.xml | /llms.txt |
| Usage | To check access permissions before crawling the site | To index the site and quickly access all pages | To help the LLM provide accurate information when responding to user queries |
| Does it give context? | No (only allow/disallow information) | No (only a list of URLs) | Yes (it provides context on how to interpret the content and structured data references) |
| External links | Does not include | May include, but they are generally internal links | May refer to external URLs if necessary to understand the information |
| Size/density | Very small (typically a few lines) | Can be large (lists a large number of pages) | Medium-sized (optimized to fit within LLMs’ context window) |
| Use case | To specify how search engines or other bots should behave on the site | To enable search engines to crawl the site more easily and comprehensively | To help LLMs use the site’s content more meaningfully and accurately (especially for inference) |
| Use for training | No | No | Although its main goal is to support inference, it may also serve as training data down the line |
| Can it be used as an alternative to the others? | No | No | No; it doesn’t replace sitemap.xml, it’s complementary |

Potential Limitations and Challenges of llms.txt

This proposed standard naturally brings some potential limitations and challenges:

- Requirement for widespread adoption: For the standard to work, it needs to be widely adopted by website owners. Currently, there is no widespread implementation.

- Does not fully solve the dynamic content problem: If the main content of a web page is largely generated by JavaScript, the llms.txt file may not fully reflect this content.

- Lack of visual and other multimedia content: Since llms.txt only contains text, it does not provide information about images, videos, or other multimedia content. Thus, it does not eliminate the need for JavaScript execution environments.

Common Criticisms Towards llms.txt

Some argue that llms.txt does not deserve the importance attributed to it, using the following arguments:

1. Lack of Support from Major Players: A Key Obstacle to Standard Adoption

The fact that major players such as Anthropic, OpenAI, and Google have not yet announced support for this standard poses a serious barrier to its widespread adoption and effectiveness.

🟩 AEO Radar Note: Anthropic’s use of this standard on its own site refutes part of this criticism. However, the fact that OpenAI and Google have not yet expressed support still leaves a question mark regarding its widespread adoption.

2. Questioning the Need for llms.txt When Web Access Exists

If a bot can already access and analyze a website’s content, why would an additional llms.txt file be necessary? If the bot still needs to check other content to determine if it’s spam, the added step’s benefits become unclear.

🟩 AEO Radar Note: IDEs like Cursor and Windsurf or applications like Claude can use llms.txt files to gather context for tasks, and some of them are already doing so. These applications read and process URLs from llms.txt files and present the relevant content to LLMs, significantly countering this criticism.

3. Potential for Publisher Misuse: The Risk of Presenting Different Content

The potential for publishers to misuse llms.txt by presenting different sets of content to AI agents versus users/answer engines raises serious concerns.

🟩 AEO Radar Note: The llms.txt file simply lists the URLs a publisher recommends to AI agents, along with short descriptions; it is technically a neutral tool. It works as an index and carries little content itself.

However, the mentioned risk is real: if a publisher intentionally shows different content to AI agents and users, this constitutes server-side content manipulation. This is known as cloaking and is independent of llms.txt. The problem arises not from the markdown file but from server behavior.

Thus, the question of how to mitigate the risk known as “AI cloaking” remains important, for example through AI bot content consistency checks, integrity audits, and community norms.

👇 Think of it like this…

A traffic light is installed. 🚦

The light works properly; the rules are clear. But if drivers deliberately run the red light, the problem is not with the light but with the drivers. Nevertheless, people might eventually say, “We can’t trust this light, let’s set up a different system.”

The situation is exactly like that; if misuse spreads, trust in llms.txt diminishes: “llms.txt compliance” ceases to be a quality signal and starts being perceived as a manipulation tool.

However, if it becomes a real industry standard, criticisms toward llms.txt could decrease, and the system might work more flawlessly.
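The "content consistency check" idea mentioned above can be approximated by comparing the words in a Markdown version with the visible text of the HTML page. This is a deliberately naive sketch: the 0.8 threshold, the function names, and the sample texts are arbitrary assumptions, and link URLs or boilerplate left in either version would skew the score.

```python
import re
from difflib import SequenceMatcher


def words(text: str) -> list[str]:
    """Lowercase the text and keep only alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def similarity(markdown_text: str, html_visible_text: str) -> float:
    """Word-level similarity between the two versions, from 0.0 to 1.0."""
    return SequenceMatcher(None, words(markdown_text), words(html_visible_text)).ratio()


def is_consistent(markdown_text: str, html_visible_text: str, threshold: float = 0.8) -> bool:
    # The threshold is an arbitrary assumption; tune it on real pages.
    return similarity(markdown_text, html_visible_text) >= threshold


MARKDOWN = "# Return Policy\n\nItems can be returned within **30 days**."
HTML_TEXT = "Return Policy Items can be returned within 30 days."

print(is_consistent(MARKDOWN, HTML_TEXT))
print(is_consistent(MARKDOWN, "Limited offer: buy two, get one free"))
```

A low score does not prove cloaking, but it flags pages where the AI-facing and human-facing versions have drifted apart and deserve a manual look.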

4. The Potential for llms.txt to Share a Similar Fate with Keywords Meta Tags

Some compare it to keywords meta tags. Once important for SEO, these tags lost relevance due to their potential for abuse and because search engines developed better content understanding capabilities.

Similarly, there is a risk that llms.txt might share the same fate. If answer engines prefer relying on their own content analysis methods instead of trusting this standard, the practical value of llms.txt may diminish.

🟩 AEO Radar Note: In projects like LangChain’s GitHub repositories, there are examples and tools for reading and processing llms.txt files.

This shows developer efforts to integrate these files into LLM workflows. If leading AI companies find llms.txt useful, it suggests the standard may not end up being a completely useless meta tag. Yet, if widespread adoption does not occur, this comparison could remain valid.

🔑 Key takeaway: The fact that some major companies are using and integrating llms.txt into their workflows demonstrates the standard’s potential value and shows that at least some key players embrace it.

This undermines the “nobody is using it” claim. However, other criticisms (lack of widespread adoption, necessity debates, and misuse risks) remain valid. The future of llms.txt depends on whether other major AI services adopt the standard and how potential misuse is prevented.

Final Thoughts: A Simpler Way Forward for Answer Engines

The llms.txt file is a structured solution designed to simplify how answer engines access, parse, and use web content.

🅿️ Think of drivers looking for the perfect parking spot in a lot. Without clear and consistent signage, they would have to drive row by row, straining their eyes in the hope of finding an empty spot.

Frustrating.

But this standard proposal is like adding special signs for drivers. It allows them to reach their destination without having to navigate complex HTML structures, ads, and irrelevant content.

Of course, no solution is without its challenges. Some criticisms remain that could keep this proposal from becoming a widespread standard. That’s why it’s worth keeping an eye on how things evolve from here.

Taking Action: What’s Next for Developers and Brands

- Define an AEO strategy: Create and identify the content you want to be featured in answer engines.

- Generate and add llms.txt: Produce a file that complies with the standards outlined in our article and add it to the root directory of your site.

- Publish in two formats: Automatically generate Markdown along with HTML. Tools like nbdev can simplify this process.

- Test: Use tools like LangChain and LlamaIndex to test how LLMs process your content. Monitor your content’s visibility in answer engines with AEO tools and refine your strategy.